df.coalesce(1).write.format("").option("header", "true").option("delimiter", "\t").option("compression", "gzip").save("dbfs:/FileStore/df/fl_insurance_sample.csv") You can chain these into a single line as such. option("encoding", "utf-8"): By default set to utf-8. option("escape", "escape_char"): Escape specific characters. option("nullValue", "replacement_value"): Replace null values with the value you define. option("compression", "gzip"): Compress files using GZIP to reduce the file size. If this is the case, try a different character, such as "|". option("delimiter", "your_delimiter"): Define a custom delimiter if, for example, existing commas in your dataset are causing problems. Other options that you may find useful are: It tells Databricks to include the headers of your table in the CSV export, without which, who knows what’s what? option("header", "true") is also optional, but can save you some headache. coalesce(1) forces Databricks to write all your data into one file (Note: This is completely optional).coalesce(1) will save you the hassle of combining your data later, though it can potentially lead to unwieldy file size. If your dataset is large enough, Databricks will want to split it across multiple files. # Storing the data in one CSV on the alesce(1).write.format("").option("header", "true").save("dbfs:/FileStore/df/fl_insurance_sample.csv") # Loading a table called fl_insurance_sample into the variable dfdf = spark.table('fl_insurance_sample')

#Phraseexpress export as csv file code

If you have more than 1 million rows, you’re going to need the code below instead. If you have less than 1 million rows, you’re all set! 🎉 Pat yourself on the back and go get another coffee.

#Phraseexpress export as csv file full

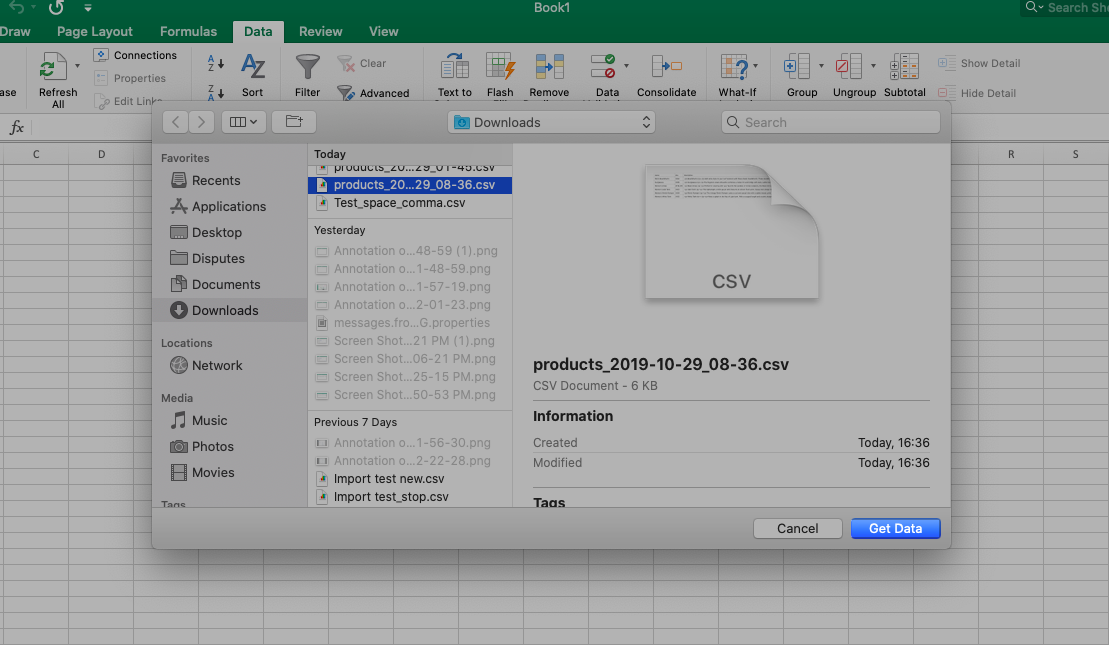

If you click the arrow, you'll get a small dropdown menu with the option to download the full dataset. Underneath the preview, you'll see a download button, with an arrow to the right.

# Displaying a preview of the data display(df.select("*")) The first (and easier) method goes like this.
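One thing worth knowing about the Spark export: `save("dbfs:/FileStore/df/fl_insurance_sample.csv")` creates a folder with that name, not a single file. Even with `coalesce(1)`, the actual data lands in a `part-...` file inside it, next to a few marker files. Here is a minimal sketch of how you might pick that file out of a folder listing. The helper name `find_part_file` and the file names in the example listing are made up for illustration.

```python
from typing import Iterable, Optional


def find_part_file(file_names: Iterable[str], suffix: str = ".csv") -> Optional[str]:
    """Return the first Spark part file with the given suffix, or None if there is none."""
    for name in sorted(file_names):
        # Spark names its data files part-<number>-...; marker files start with "_"
        if name.startswith("part-") and name.endswith(suffix):
            return name
    return None


# Hypothetical listing of the output folder (real file names will differ)
example_listing = ["_SUCCESS", "_committed_123", "_started_123", "part-00000-tid-123-c000.csv"]
print(find_part_file(example_listing))
```

In a Databricks notebook, the listing could come from something like `[f.name for f in dbutils.fs.ls("dbfs:/FileStore/df/fl_insurance_sample.csv")]`, and you could then copy the part file to a friendlier name with `dbutils.fs.cp`. If you wrote the export with gzip compression, pass `suffix=".csv.gz"` instead.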
